For fast data retrieval, cache reigns supreme. If you’re curious about how Elasticsearch uses a multitude of caches to ascertain you get data as soon as possible by reading this blog. Elasticsearch’s caching characteristics will help you retrieve data more easily thereafter primary data entries, as explained in this blog. Get this online course Elasticsearch training where you will be comprehending various concepts of Elasticsearch which comprises Elasticsearch clusters configuration, Analyzers insights, the internal working of Elasticsearch, aggregations, mappings, queries, etc. Elasticsearch makes extensive use of a variety of caches, however, in this study, we’ll only look at:

- Request cache at the shard level.

- Page cache (Seldom known as the cache of a filesystem).

- Query cache.

Elasticsearch: One cache at a time, boosting query speed

Page cache

The page cache’s basic concept is to read data from the disc and store it in usable memory such that the memory would be used to return the next read without the need for a disc seek. The program, which is sending relatively similar code calls, comprehends a lot of this. However, instead of reading from a disc, the operating system would make use of the page cache.

Consider the following diagram, in which the program is making a code a request for data reading from a disc, and the operating/kernel system reads the data from the disc for the first time and stores it in the cache of a page in memory. The kernel will then relocate the second read to the cache of a page inside the memory of an operating system, making it even quicker.

What can this imply for Elasticsearch as a whole? Instead of retrieving data from the disc, you can make use of the cache of a page to retrieve data even more quickly. Elasticsearch memory does not exceed half of the total usable memory for many reasons, one of which is that the remaining half should be allocated for the cache of a page. It even assures that There is no wastage of memory; instead, it is put to good use by the page cache.

Page cache testing

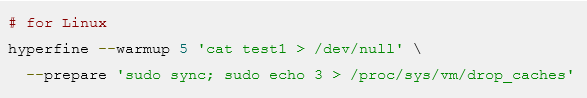

If you’d like to test the usability of the cache of a page, you should use hyperfine. The hyperfine is a command-line benchmarking application. Let’s use dd to make a 10-megabyte disc.

dd if=/dev/urandom of= test1 bs=1M count=10

If you’d like to execute the above on macOS, you can use gdd and make sure coreutils is configured with brew.

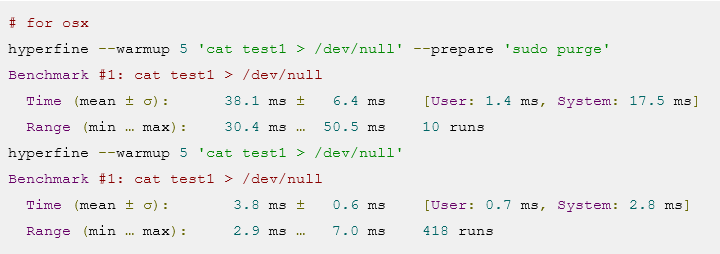

As a result, executing the same cat command on this local instance of macOS without the need for clearing the cache of a page is around 10 times faster, as disc access is avoided. For the data in Elasticsearch, you certainly want this type of access pattern!

Read More:- AWS ubuntu setup || launch an AWS EC2 Server

Request cache at the shard level

By caching only aggregation-based search responses, this cache helps in Kibana’s acceleration. Let’s envision the issue that this cache solves by overlaying the acknowledgment of aggregation with data retrieved from multiple indices.

In your workplace, a dashboard of Kibana usually shows data from multiple indexes, and you basically decide a timeframe, such as the last seven days. The number of shards or indices queried is irrelevant. If you use data streams to build time-based indexes, you could probably wind up with something like this.

Let’s fast forward 3 hours to see what the dashboard looks like now:

The second visual is somewhat relevant to the first, with the exception that some data has out of reach and is no longer visible (the blue line to the left), and the black line indicates that more data has been added at the end. What hasn’t changed, do you think? The logs-000003, logs-000004, and logs-000002 indices returned data.

And if the data had been cached on the page, the aggregation and search would have to be done on top of the results. There’s no reason to perform this extra job. Elasticsearch has undergone another optimization applied in order to make this work: being able to rewrite a query. Rather than providing a timestamp logs’ collection indexes logs-000003, logs-000004, and logs-000002, this can be rewritten as a query “match_all” so every document inside that index matches the timestamp (Of course, other filters would apply). All requests are now treated as the same request on all three indexes, allowing them to be cached.

This is now the request cache at the shard level. The aim is to cache the whole response to a request so that you don’t have to do any searches and can send back the response almost immediately — to avoid returning stale data, as long as the data hasn’t changed!

Read More: – Content Marketing Made Simple: A Step-by-Step Guide

Query cache

In this section, we’ll look at the query cache. This cache operates in somewhat unique ways to the others. The cache of a page stores data regardless of how often it has already been read in response to a query. When a similar query is used, the query cache at the shard level caches the data. The cache of queries is much more granular, allowing data to be cached that is replicated through any queries.

Let’s look at how this functions. Assume we’re searching at logs. This month’s data could be viewed by three distinct users. Each consumer, however, employs a distinct term for search:

- The User1 explores for phrase “failure”

- The User2 explores for phrase “Exception”

- The User3 explores for phrase “pcre2_get_error_message”

Each quest yields different results, despite the fact that they all occur during an identical time frame. The query cache comes into play at this point: it will cache only the portion of a query that is being cached. The basic concept is to cache data that is written to disc and then only scan in those items. This is most likely the format of your query:

Each query’s filter section is the same. With an inverted index, this is a very basic representation of the data. A record id is associated with each timestamp.

So, how could this be made more efficient and reused in subsequent queries? Bit sets (also known as bit arrays) are helpful in this regard. A bit collection is an array of bits, each of which signifies a document. For this specific @timestamp filter, we can build a steadfast bit set that covers a month. A 0 indicates that the text is not in the set, while a 1 indicates that it is. The corresponding bit set will be as follows:

Set this bit on a per-segment basis after you’ve developed it (After a merge or if a new section is created, it will have to be recreated.), Before ever running the filter, excluding four records, the next query does not require any disc access. There are a few amusing properties of bit sets. They should be blended first. You can quickly work out which records have all bits set if you have two-bit sets and two filters, or you can combine an OR query. Compression is another fascinating feature of bit sets.

Conclusion:

In this session, you have witnessed to comprehend the concepts of elastic search like page cache in utilizing the memory access, validating the page caches by applying the commands, returning responses with shared level request caches, and queries cache to retrieve the search results.